Google has made a significant change: you now need to enable JavaScript to use Google Search.

According to Google, this move is about security and a better experience for users like you and me. By requiring JavaScript, Google says it can better protect its platform against spam, bots, and other types of abuse. It also claims the change ensures you get “the most relevant and up-to-date information.” 1

This is what you’ll see today if you open Google Search with JavaScript disabled:

But let’s be clear – this isn’t a small adjustment. Google uses JavaScript for many of its features. If you’ve ever noticed how it suggests queries while you type or shows tailored results, that’s JavaScript at work.

Why do I even mention this?

Google says that fewer than 0.1% of searches come from browsers with JavaScript turned off. That sounds tiny, but remember how big Google is: it handles roughly 8.5 billion searches a day. That tiny slice still adds up to millions of searches every day, coming from no-script browsers, privacy-focused users, and the like.
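A quick back-of-the-envelope check, using only the two figures above (under 0.1% of roughly 8.5 billion daily searches):

$$
0.001 \times 8.5 \times 10^{9} = 8.5 \times 10^{6}
$$

In other words, “fewer than 0.1%” can still mean up to about 8.5 million searches a day. Keep in mind this counts searches, not people, since one person can run many searches.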

Then there’s the question of accessibility. JavaScript-heavy websites can be a nightmare for certain tools like screen readers. If you rely on these tools, this change could make using Google Search much harder.

It feels ironic, doesn’t it? A move supposedly designed to improve the experience might end up leaving some users behind.

In the end, if you’re someone who browses with JavaScript disabled, either for privacy or security reasons, this change forces a choice: turn it back on or stop using Google Search.

Still, that’s just one part of the story. There’s a bigger game being played here.

User concerns with Google’s policy

Google positions JavaScript as a hero in the fight against malicious scraping and spam. But critics point out that JavaScript itself can be a security headache.

Here’s what Google claims: with JavaScript enabled, it can roll out better tools to fight bots and abuse. These include techniques like rate-limiting and CAPTCHAs. Strictly speaking, rate-limiting runs on the server, while CAPTCHAs and similar browser challenges are the parts that need JavaScript. On the surface, it sounds reasonable.
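Google hasn’t published how its rate-limiting works, so treat this as a generic illustration only. A common technique is the token bucket: each client gets a small budget of requests that refills over time, and requests beyond that budget are refused. Here’s a minimal Python sketch; the class name, capacity, and refill rate are hypothetical values chosen for the example, not anything Google uses.

```python
import time


class TokenBucket:
    """Minimal token-bucket rate limiter (illustrative sketch, not Google's implementation).

    The bucket holds up to `capacity` tokens and refills at `refill_rate`
    tokens per second. Each allowed request spends one token; when fewer
    than one token is left, the request is rejected.
    """

    def __init__(self, capacity: float, refill_rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock          # injectable so the demo can control time
        self.tokens = capacity      # start with a full bucket
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Add the tokens earned since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


if __name__ == "__main__":
    # Hypothetical fake clock: time only moves when we change fake_now[0].
    fake_now = [0.0]
    bucket = TokenBucket(capacity=3, refill_rate=1.0, clock=lambda: fake_now[0])

    print([bucket.allow() for _ in range(5)])  # [True, True, True, False, False]

    fake_now[0] += 2.0  # two seconds pass, so two tokens are refilled
    print([bucket.allow() for _ in range(3)])  # [True, True, False]
```

The injectable `clock` is only there so the example behaves deterministically. A real limiter would use the system clock and keep a separate bucket per client, for example per IP address.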

But let’s talk about what critics are saying. While Google focuses on security, JavaScript itself isn’t exactly risk-free.

For instance, Claranet found more than 1,000 instances of outdated JavaScript libraries across the web applications it tested in 2024. These outdated libraries were linked to vulnerabilities such as Cross-Site Scripting (XSS), Denial of Service (DoS) attacks, and sensitive information disclosure. That’s a significant security risk in the JavaScript actually running out there. 2

More to the point, Reflectiz emphasized in another piece that JavaScript remains highly vulnerable, particularly to risks like, you guessed it, Cross-Site Scripting and Man-in-the-Middle (MITM) attacks. These vulnerabilities often come from insecure third-party libraries or frameworks, which can introduce malicious code into applications. 3
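To make Cross-Site Scripting a bit more concrete: it happens when a page inserts untrusted text into its HTML without escaping it, so the browser runs that text as code. Here’s a minimal Python sketch of the pattern and the standard fix. The two render functions and the payload are hypothetical; `html.escape` is Python’s standard-library function.

```python
import html


def render_comment_unsafe(comment: str) -> str:
    # Vulnerable: untrusted input is pasted straight into the markup,
    # so any <script> tag inside it becomes real, executable HTML.
    return f'<p class="comment">{comment}</p>'


def render_comment_safe(comment: str) -> str:
    # html.escape turns &, <, >, " and ' into HTML entities,
    # so the browser displays the text instead of executing it.
    return f'<p class="comment">{html.escape(comment)}</p>'


payload = "<script>alert('stolen cookie')</script>"
print(render_comment_unsafe(payload))
# <p class="comment"><script>alert('stolen cookie')</script></p>
print(render_comment_safe(payload))
# <p class="comment">&lt;script&gt;alert(&#x27;stolen cookie&#x27;)&lt;/script&gt;</p>
```

Vulnerable libraries usually fail in subtler ways than this, but the end result is the same: text an attacker controls ends up running as script in your browser.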

So does this mean preventing bot traffic matters more to Google than user security? I’ll let you answer that one, but let me tell you this: I read through a number of threads about this change (in both English and Polish) and couldn’t find a single positive comment in any of them. 🤷‍♂️

What’s really going on?

I don’t want to sound overly suspicious (I totally do), but the timing and execution of this change have made people wonder if there’s more to the story.

Is this only about security, or is Google tightening its grip on how people interact with its search engine?

Whatever the motive, one thing is clear: this change affects more than just a “tiny fraction” of users. Case in point: SEO tools.

If you work with any SEO tools or depend on them to track your rankings, Google’s JavaScript requirement might make your work a lot harder. Many popular solutions have started running into serious problems, and it’s not a coincidence.

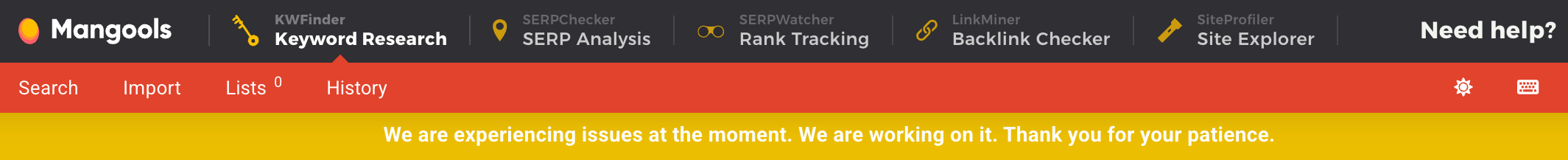

Here’s a screenshot from Mangools:

Other SEO tools have run into similar issues. The developers are likely working round the clock to find workarounds, and Twitter is full of updates about this. Some tools, like Semrush, have been coping well, especially those that depend more on third-party data sources. Tools that have been getting their data from Google directly have a much rockier road ahead.

Here’s the problem: scraping Google’s search results has become much harder. Most SEO tools rely on scraping live search results from Google. And since that is getting more difficult to do by the minute, they’re experiencing delays or even outages.

What’s causing this? For one, as far as I understand the mechanism, a simple HTTP request is much faster and cheaper than rendering a page with JavaScript enabled, and a simple request no longer gets you the results. On top of that, Google is likely deploying extra roadblocks like rate-limiting, IP blocking, and CAPTCHAs.
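To see why a plain HTTP request stops being enough once results depend on JavaScript, here’s a simplified illustration. The markup is hypothetical (it is not Google’s actual page): the static HTML a scraper downloads contains only a script, and the result link exists only after a browser runs that script. A parser that reads the raw HTML, the way a simple scraper does, finds nothing.

```python
from html.parser import HTMLParser

# Hypothetical page: the result link is created by JavaScript at runtime,
# so the raw HTML a plain HTTP client receives contains no result links.
STATIC_HTML = """
<html>
  <body>
    <noscript>Please enable JavaScript to continue.</noscript>
    <div id="results"></div>
    <script>
      const a = document.createElement("a");
      a.className = "result";
      a.href = "https://example.com";
      a.textContent = "Example result";
      document.getElementById("results").appendChild(a);
    </script>
  </body>
</html>
"""

# Hypothetical markup of the same page after a browser has run the script.
RENDERED_HTML = (
    '<div id="results">'
    '<a class="result" href="https://example.com">Example result</a>'
    "</div>"
)


class ResultLinkParser(HTMLParser):
    """Collects href values from <a class="result"> tags in static HTML."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("class") == "result":
            self.links.append(attrs.get("href"))


def extract_result_links(html_text: str) -> list:
    parser = ResultLinkParser()
    parser.feed(html_text)
    parser.close()
    return parser.links


print(extract_result_links(STATIC_HTML))    # []
print(extract_result_links(RENDERED_HTML))  # ['https://example.com']
```

Getting from the first result to the second means actually executing the script in a browser engine, which is what headless browsers do, and that costs far more time and compute per page than a plain download.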

In short, scraping Google Search is no longer just technical – it’s expensive. SEO companies might need to invest in headless browsers, proxy servers, and more powerful infrastructure just to keep up. You can probably guess what that means for end users: higher subscription costs.

And let’s not forget that Google has never exactly been a fan of anything with “SEO” in its name. So SEO tools going down isn’t even on its to-do list of problems to solve.

Is this all about security?

On the surface, Google says it’s about protecting the platform from bots and spam. But it’s hard not to wonder if there’s another motive at play. After all, this shift conveniently makes it harder for anyone to scrape Google’s data, and the SEO tool outages have pretty much confirmed that effect by now.

Think about it. Google dominates the search engine market, and the data it produces is incredibly valuable. If it suddenly becomes more difficult (and costly) for third parties to access that data, Google ends up with even more control.

If you’re an SEO professional or you use rank-checking tools, you’ll probably feel the impact of this change sooner rather than later. And while the big companies might eventually adapt, smaller players could struggle, or even shut down. This feels like a tipping point. And whether you see that as progress or a power grab depends on your perspective.

More context on user security vs JavaScript

If you’re someone who disables JavaScript for privacy or security reasons, Google’s new requirement might feel like a dealbreaker. And honestly, I can see why.

Some people deliberately block JavaScript, using tools like NoScript or browsing with Tor. For them, this isn’t just a preference but a way to stay safer online. Disabling JavaScript can reduce your exposure to potential vulnerabilities and tracking scripts. JavaScript is a powerful tool, but its ecosystem is enormous and includes countless third-party libraries. And those libraries just aren’t always secure.

To put it in perspective, remember the research I mentioned earlier about outdated and third-party JavaScript libraries introducing vulnerabilities. That’s not a hypothetical risk; it’s a real, ongoing problem.

Now, Google insists that their JavaScript environment is secure. But even if they’ve done everything right on their end, forcing everyone to enable JavaScript broadens the attack surface. It’s like asking you to open more doors and just hoping none of them lead to trouble.

Where does this leave users?

So, what happens next? Here’s what I see:

- Costs: SEO tool providers may raise prices to cover the more expensive “headless” scraping and to work around Google’s new defenses.

- User experience: Millions of JavaScript-disabled users are forced to switch it on – or switch search engines.

- Data and analytics: Marketers and agencies that rely on daily rank-tracking might see less frequent or patchier SERP insights.

- Google’s grip: With more control over how its SERPs get accessed, Google potentially solidifies its hold on what info is available, how it’s delivered, and at what price – at least for third-party data miners.

If you’re a user who disables JavaScript to stay safe, Google’s change might feel like a step backward. Of course, you can always switch to an alternative search engine.

Bottom line

Google’s hardline stance on JavaScript is part security measure, part SERP lockdown. While Google vows it’s all about keeping bad actors at bay, everyday users who disable JS are definitely feeling the heat.

This change affects everyone – from privacy-conscious users to SEO professionals – and the ripple effects are already being felt.

If you’re committed to a script-free browsing life, you might have to find a new search bestie or change your ways and enable JS.

I’ll be honest – there’s no simple answer. Both sides have valid points. Google is dealing with real challenges from bots and abuse. But by forcing everyone to use JavaScript, they’re also consolidating power in a way that feels hard to ignore.
