Want to know how to block an unwanted web crawler from your site?

While legitimate web crawlers—like those from search engines—are essential for indexing your site and improving visibility in search results, unwanted crawler bots can wreak havoc by consuming bandwidth, scraping content, and posing security risks.

In this guide, we’ll explore how to block unwanted crawlers from your site to protect your data, enhance performance, and safeguard your SEO efforts.

I’ll show you the easiest way to manage website crawlers on your site—and you don’t even need any technical knowledge to implement this.

Bonus: It only takes a few clicks!

What Is a Web Crawler?

A web crawler, also known as a bot or spider, is an automated program that systematically browses the internet to collect and index information from websites. Search engines rely on crawlers to discover, understand, and rank websites, but not every crawler serves that purpose. Common types of web crawlers include:

- Search engine crawlers: Index websites to display in search results.

- Data scrapers: Extract information, often for unauthorized use.

- Malicious bots: Perform harmful activities, such as spamming or hacking attempts.

- AI bots: Crawl your site and use your content, for example to train AI models, without your authorization.

Because of the harmful types, you need full control over which crawlers can access your site.

How Crawlers Work

Web crawlers navigate your site by following links from one page to another. They start with a list of URLs, often provided via an XML sitemap, which is a roadmap of your website’s structure. Crawlers use this sitemap to index your pages efficiently.
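To make the sitemap step concrete, here is a minimal Python sketch of how a crawler might pull page URLs out of an XML sitemap before visiting them. It uses only the standard library; the `sitemap_urls` helper and the example.com sitemap are hypothetical, and real crawlers also handle sitemap index files, compression, and link discovery, which this sketch skips.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol for <urlset> documents.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> list[str]:
    """Return the <loc> URLs listed in a sitemap, in document order."""
    root = ET.fromstring(sitemap_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


# Hypothetical sitemap for example.com, for illustration only.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/hello-world/</loc></url>
</urlset>"""

if __name__ == "__main__":
    # A crawler would queue these URLs for fetching.
    for url in sitemap_urls(SAMPLE_SITEMAP):
        print(url)
```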

The good news is that you can communicate with web crawlers using a robots.txt file. This plain text file provides instructions on which pages or directories to crawl or avoid. However, not all bots respect these instructions, especially malicious ones.
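Because honoring robots.txt is voluntary, the check happens inside the crawler's own code. The minimal Python sketch below, built on the standard library's `urllib.robotparser`, shows what a well-behaved crawler might do before requesting pages; the `allowed_urls` helper, the `ExampleBot` user agent, and the sample rules are hypothetical. A malicious bot simply never runs a check like this.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def allowed_urls(robots_txt: str, user_agent: str, urls: list[str]) -> list[str]:
    """Keep only the URLs that robots.txt permits for this user agent.

    This filtering runs in the crawler's own code: a bot that skips it
    can still request every URL, which is why robots.txt is advisory.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    candidates = [
        "https://example.com/blog/",
        "https://example.com/private/report.pdf",
    ]
    print(allowed_urls(ROBOTS_TXT, "ExampleBot", candidates))
```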

Why Block an Unwanted Web Crawler?

So, why should you invest time, energy, and resources to block unwanted crawlers?

Let me give you my top 4 reasons.

1. Security Concerns

Unwanted web crawlers can pose significant security risks. They might attempt to access sensitive data, exploit vulnerabilities, or inject malicious code into your website.

2. Performance Issues

Excessive crawling can overload your server, leading to slow website performance or even downtime. This can negatively impact user experience and your site’s reputation.

3. Privacy Protection

By blocking unauthorized bots, you prevent them from accessing and potentially exposing confidential information or proprietary data.

4. SEO Impact

Malicious crawlers might republish your content elsewhere, creating duplicate content that can compete with your original pages in search results. They can also misuse your content, affecting your search rankings and brand credibility.

How to Block an Unwanted Web Crawler: The Super-Easy Way

Now that you know the dangers unwanted crawlers can pose, let’s quickly dive into how to block them from messing with your site.

Step 1: Install AIOSEO

The first step to dealing with an unwanted web crawler is to install the All in One SEO (AIOSEO) plugin on your WordPress site.

AIOSEO is the best WordPress SEO plugin on the market. Over 3 million savvy website owners and marketers trust it to help them dominate the search engine results pages (SERPs) and drive relevant site traffic.

The plugin has many SEO features and modules to help you optimize your site for search engines and users, even without coding or technical knowledge. It also provides advanced features to control crawler access.

Regarding the latter, AIOSEO has an advanced feature called Crawl Cleanup. But more about this in a moment.

Need step-by-step instructions on how to install AIOSEO?

Then, check our detailed installation guide.

Step 2: Open Crawl Cleanup

In your WordPress dashboard, navigate to AIOSEO » Search Appearance » Advanced.

Next, scroll down to the Crawl Cleanup toggle and ensure it’s set to “On”.

This feature lets you easily manage how search engines and bots interact with your website.

Crawl Cleanup has many settings to help you optimize your site’s crawlability and performance.

Crawl Cleanup is a must-have tool in any SEO plugin, as it helps you control bot access, reduce server load, and improve site performance.

In our case, however, we want the Unwanted Bots option.

Step 3: Select the Web Crawler You Want to Block

In the Unwanted Bots section, you’ll find a list of known bots and crawlers.

Simply check the boxes next to the unwanted bots you want to block.

Alternatively, you can:

- Block all unwanted bots, giving you more control over your crawl budget.

- Primarily target AI crawlers, preventing them from collecting your content without permission.

When you do this, AIOSEO will automatically update your robots.txt file and apply the necessary settings to prevent those unwanted bots from accessing your site.
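For illustration, here is the kind of robots.txt group that blocks specific crawlers by name while leaving others alone, checked with Python's `urllib.robotparser`. `GPTBot` (OpenAI) and `CCBot` (Common Crawl) are real crawler user-agent tokens used here as examples; the exact rules AIOSEO writes, and the bots it lists, may differ, so treat this as a sketch rather than the plugin's output. The `can_crawl` helper is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block two AI crawlers by user-agent token and
# leave every other crawler unrestricted. Not necessarily AIOSEO's output.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Disallow:
"""


def can_crawl(user_agent: str, url: str = "https://example.com/blog/") -> bool:
    """Return True if the rules above let this user agent fetch the URL."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("GPTBot", "CCBot", "Googlebot"):
        print(agent, can_crawl(agent))
```

An empty `Disallow:` line under `User-agent: *` means "no restrictions" for every crawler not named in an earlier group.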

Step 4: Edit robots.txt (Optional)

Want more advanced control over managing unwanted web crawlers?

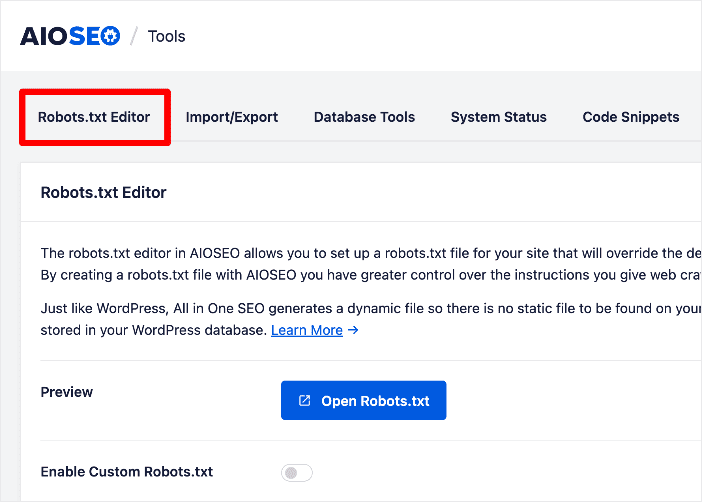

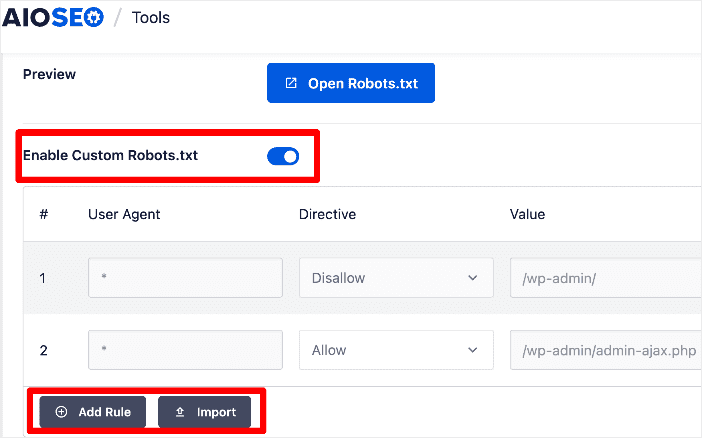

You can also set crawl directives in your site’s robots.txt file directly within AIOSEO. To do so, go to the All in One SEO menu » Tools » Robots.txt Editor.

The robots.txt file tells bots which parts of your site they can or cannot access. You can add directives to disallow bots from accessing certain directories or files.
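As an example of directory- and file-level directives, the sketch below defines a few hypothetical rules and checks them with Python's standard `urllib.robotparser`. The paths and the `is_allowed` helper are assumptions for illustration; blocking `/wp-admin/` while allowing `admin-ajax.php` mirrors a common WordPress pattern.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep all crawlers out of /wp-admin/ except one file,
# and out of a single PDF. The Allow line is listed first because Python's
# parser applies the first matching rule, whereas Google applies the most
# specific (longest) matching rule regardless of order.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Disallow: /downloads/price-list.pdf
"""


def is_allowed(path: str, user_agent: str = "ExampleBot") -> bool:
    """Check a site-relative path against the rules above."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, "https://example.com" + path)


if __name__ == "__main__":
    for path in ("/wp-admin/admin-ajax.php", "/wp-admin/options.php",
                 "/downloads/price-list.pdf", "/blog/"):
        print(path, is_allowed(path))
```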

You can even import directives from another site, making it very easy to apply directives from one of your other sites or a site you like.

By using AIOSEO’s Robots.txt Editor, you can customize your robots.txt instructions without dealing with complex code.

How to Block an Unwanted Web Crawler: Your FAQs Answered

How do I stop web crawlers from accessing my entire website?

The best way to stop unwanted crawlers from accessing your site is to use AIOSEO’s Crawl Cleanup feature. You can protect your site from unauthorized crawling with just a few clicks.

How do I stop a Google crawler?

If you need to block Googlebot, add the following to your robots.txt file:

```
User-agent: Googlebot
Disallow: /
```
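Keep the `User-agent` and `Disallow` lines together as one group. Parsers can differ in how they treat a blank line between them: Python's `urllib.robotparser`, for instance, treats it as the end of a group and drops the orphaned `Disallow`. The minimal sketch below shows that difference; it only exercises Python's parser, not Googlebot's own, and the `googlebot_blocked` helper and example.com URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def googlebot_blocked(robots_txt: str) -> bool:
    """Return True if the rules stop Googlebot from fetching the homepage."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch("Googlebot", "https://example.com/")


# The two directives written as one contiguous group.
CONTIGUOUS = "User-agent: Googlebot\nDisallow: /\n"
# The same directives separated by a blank line.
SPLIT = "User-agent: Googlebot\n\nDisallow: /\n"

if __name__ == "__main__":
    print("contiguous:", googlebot_blocked(CONTIGUOUS))
    print("split:", googlebot_blocked(SPLIT))
```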

Alternatively, if you only want to keep specific pages out of Google’s search results, use the Robots Meta Tags feature in AIOSEO to set noindex directives on those pages.

What file is used to stop unwanted web crawlers on a website?

The robots.txt file is the primary means of communicating with crawlers. Additionally, you can use robots meta tags within individual pages to instruct crawlers not to index or follow links on that page.
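To illustrate how a crawler reads these per-page instructions, here is a minimal Python sketch using the standard `html.parser` module. The `RobotsMetaParser` class, the `robots_directives` helper, and the sample markup are hypothetical; the sketch only looks at the generic `robots` meta name, not crawler-specific names such as `googlebot`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        # Directives are comma-separated, e.g. "noindex, nofollow".
        for token in attributes.get("content", "").split(","):
            token = token.strip().lower()
            if token:
                self.directives.add(token)


def robots_directives(html: str) -> set[str]:
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


# Hypothetical page markup, for illustration only.
SAMPLE_PAGE = (
    '<html><head><meta name="robots" content="noindex, nofollow">'
    "</head><body>Hello</body></html>"
)

if __name__ == "__main__":
    print(sorted(robots_directives(SAMPLE_PAGE)))
```

A crawler can only read this tag if it is allowed to fetch the page. If robots.txt blocks the page, the crawler never sees its `noindex`, and the URL can still appear in search results when other sites link to it.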

I hope this post helped you learn how to block unwanted web crawlers from accessing your site. You may also want to check out other articles on our blog, like our guide on canonical tags or our list of the best WordPress plugins.

If you found this article helpful, then please subscribe to our YouTube Channel. You’ll find many more helpful tutorials there. You can also follow us on X (Twitter), LinkedIn, or Facebook to stay in the loop.

Disclosure: Our content is reader-supported. This means if you click on some of our links, then we may earn a commission. We only recommend products that we believe will add value to our readers.