Want to know how to stop bot traffic from messing with your site’s performance?

If you’ve noticed unusual activity on your website—like sudden spikes in traffic from unfamiliar locations, strangely high bounce rates, or a sluggish user experience—you might be dealing with bot traffic.

While some bots are harmless or even helpful, many are malicious and can wreak havoc on your site’s performance, analytics, and security.

Bot traffic can skew your analytics data, overload your servers, and even expose your site to security threats like data breaches or DDoS attacks. Understanding how to identify and stop bad bot traffic is essential for maintaining a healthy and efficient website.

In this guide, I’ll walk you through how to stop bot traffic from interfering with your site’s performance.

Spoiler alert: You can tackle bot traffic issues directly from your WordPress dashboard using the right tools.

Let’s dive in and take back control of your site!

What is Bot Traffic?

First things first—what exactly is bot traffic?

Bot traffic refers to non-human visitors accessing your website. Bots are software applications that perform automated tasks over the Internet. They come in various forms and serve different purposes.

Some bots are beneficial, helping your website get indexed by search engines or providing useful functionalities. However, not all bots are good. Malicious bots can cause serious problems, from slowing down your site to stealing sensitive information.

Understanding the different types of bots is crucial in managing bot traffic effectively.

Types of Bot Traffic

Let me break down the types of bot traffic into two categories: good bots and bad bots.

Good Bots

Good bots play a vital role in the internet ecosystem. Here are some examples:

- Search Engine Crawlers: Bots from search engines like Googlebot (Google) and Bingbot (Bing) crawl your site to index your content. This process is essential for your site to appear in search results.

- Digital Assistants: Bots used by digital assistants like Siri, Alexa, and Google Assistant fetch information from the web to answer user queries.

- Social Media Bots: Bots from social platforms like Facebook and X (Twitter) that fetch preview data when your content is shared.

- Monitoring and Auditing Tools: Uptime monitors, performance analyzers, and site audit crawlers use bots to check that your site is online and performing well. A popular example of an audit crawler is Screaming Frog.

These bots follow standard protocols, respect your site’s robots.txt file, and generally don’t negatively impact your website.

Bad Bots

Unfortunately, not all bots are friendly. Bad bots can harm your site in various ways. Some common types include:

- Spam Bots: These bots post spam comments or form submissions, cluttering your site with unwanted content.

- Scraping Bots: These bots copy your content or data without permission, which competitors or bad actors can then republish or misuse.

- Credential Stuffing Bots: Bots that attempt to log into user accounts using stolen username and password combinations.

- DDoS Attack Bots: Distributed Denial of Service attack bots flood your server with requests, causing your site to slow down or crash.

- Click Fraud Bots: They generate fake clicks on ads, draining your advertising budget and skewing your campaign data.

- Inventory Hoarding Bots: Bots that add products to carts or reserve tickets without completing purchases, affecting your inventory management.

These bots often ignore standard protocols and can be difficult to detect and block.
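One signal that often gives flood-style bots away (such as the DDoS and credential stuffing bots above) is an unusually high request rate from a single address. Here is a minimal Python sketch of that idea, assuming you already have (timestamp, IP) pairs from your access logs. The function name, thresholds, and sample IPs are illustrative assumptions, not part of any plugin.

```python
from collections import defaultdict, deque


def flag_bursty_clients(requests, max_requests=5, window_seconds=10):
    """Return the set of IPs that sent more than `max_requests`
    requests within any `window_seconds` span.

    `requests` is an iterable of (timestamp_in_seconds, ip) pairs,
    sorted by timestamp. Thresholds here are arbitrary examples.
    """
    recent = defaultdict(deque)  # ip -> timestamps still inside the window
    flagged = set()
    for timestamp, ip in requests:
        window = recent[ip]
        window.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while window and timestamp - window[0] >= window_seconds:
            window.popleft()
        if len(window) > max_requests:
            flagged.add(ip)
    return flagged


# Hypothetical sample data: one client hammers the site once per second,
# another visits three times over a minute.
bot_hits = [(t, "203.0.113.9") for t in range(8)]
human_hits = [(0, "198.51.100.4"), (30, "198.51.100.4"), (60, "198.51.100.4")]
suspects = flag_bursty_clients(sorted(bot_hits + human_hits))
```

A check like this is only a starting point. Real visitors behind a shared network can look bursty too, so treat flagged addresses as candidates for review rather than automatic blocks.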

Why Stopping Unwanted Bot Traffic Matters

You might be thinking, “Is bot traffic really that big of a deal?”

If it’s bad bots, absolutely. Here’s why:

Protecting Website Performance

Bad bots can consume significant server resources, leading to slower load times or even downtime. This affects user experience and can harm your SEO rankings.

Protecting website performance also helps conserve energy, resulting in a smaller carbon footprint.

Ensuring Accurate Analytics

Bot traffic can skew your analytics data. When you rely on this data to make decisions about content, marketing strategies, or user experience improvements, inaccurate data leads to poor choices.

Safeguarding User Trust and Business Reputation

Security breaches erode user trust. If customers feel their data isn’t safe with you, they’ll take their business elsewhere. Plus, a reputation for poor security can be hard to shake.

How to Stop Unwanted Bot Traffic on Your Site [WordPress Edition]

Now, let’s get into the practical steps to stop bot traffic on your WordPress site. If the information above seems overwhelming or too technical, don’t worry. The solution I’ll show you today is easy to use, and it only takes a few minutes and a couple of clicks to implement.

Plus, you don’t even need any coding or technical knowledge.

Sound too good to be true?

That’s exactly what I thought before using this method.

In this section, I’ll show you how to use the All in One SEO (AIOSEO) plugin to manage bot traffic effectively.

Step 1: Install All In One SEO (AIOSEO)

The first step to stopping bot traffic on your site (the easy way) is to install All In One SEO (AIOSEO).

AIOSEO is the best WordPress SEO plugin on the market. Over 3 million savvy website owners and marketers trust it to help them dominate the SERPs (search engine results pages) and drive relevant site traffic.

The plugin has many powerful SEO features and modules to help you optimize your site for search engines and users, even without coding or technical knowledge. For blocking unwanted bots or crawlers, AIOSEO has an advanced feature called Crawl Cleanup. More on this in a moment.

Need step-by-step instructions on how to install AIOSEO?

Then, check our detailed installation guide.

Step 2: Open Crawl Cleanup

Once AIOSEO is installed and activated, it’s time to access the powerful Crawl Cleanup feature. To do that, in your WordPress dashboard, go to AIOSEO settings » Search Appearance » Advanced.

Next, scroll down to the Crawl Cleanup toggle and ensure it’s set to “On”.

Crawl Cleanup has many settings to help you optimize your site’s crawlability and performance. Examples include settings for:

- RSS Feeds

- Unwanted Bots

- Internal Site Search Cleanup

The Crawl Cleanup feature helps you control bot access, reduce server load, and improve site performance.

In our case, however, we want the Unwanted Bots option.

Step 3: Select the Web Crawlers You Want to Block

Once you’ve clicked on the Unwanted Bots tab, you’ll be presented with several options. Configuring which bots to block is surprisingly easy.

All you have to do is select the bots you want to block.

Depending on what you want to achieve, you can:

- Block all unwanted bots, giving you more control over your crawl budget.

- Primarily target AI crawlers, telling them not to crawl and reuse your content without permission.

As a result, you can help protect your site from content scraping. You also save server resources, which helps the planet since less energy is used.

These changes will modify your robots.txt file, adding disallow directives for the chosen bots. To avoid errors, AIOSEO displays a yellow alert whenever you add a bot to block. This way, you can review and track updates.
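To give you a feel for what such disallow directives look like, here is a small Python sketch that builds them for a list of bots and then checks the result with Python's built-in robots.txt parser. The exact output AIOSEO writes may differ. GPTBot and CCBot are used only as example crawler names, and the helper functions and example.com URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def build_block_rules(user_agents):
    """Return robots.txt text that disallows the whole site for each bot."""
    lines = []
    for agent in user_agents:
        lines.append(f"User-agent: {agent}")
        lines.append("Disallow: /")
        lines.append("")  # a blank line separates one bot's group from the next
    return "\n".join(lines)


def is_allowed(robots_txt, user_agent, url):
    """Check whether a rule-abiding bot may fetch `url` under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Example crawler names; swap in whichever bots you chose to block.
rules = build_block_rules(["GPTBot", "CCBot"])
print(rules)

gptbot_ok = is_allowed(rules, "GPTBot", "https://example.com/blog/post/")
googlebot_ok = is_allowed(rules, "Googlebot", "https://example.com/blog/post/")
```

In this sketch, the blocked crawlers are refused everywhere, while Googlebot is unaffected because no rule names it. That is the behavior you want: unwanted bots out, search engines still in.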

Step 4: Edit robots.txt (Optional)

Want more advanced control over bot traffic?

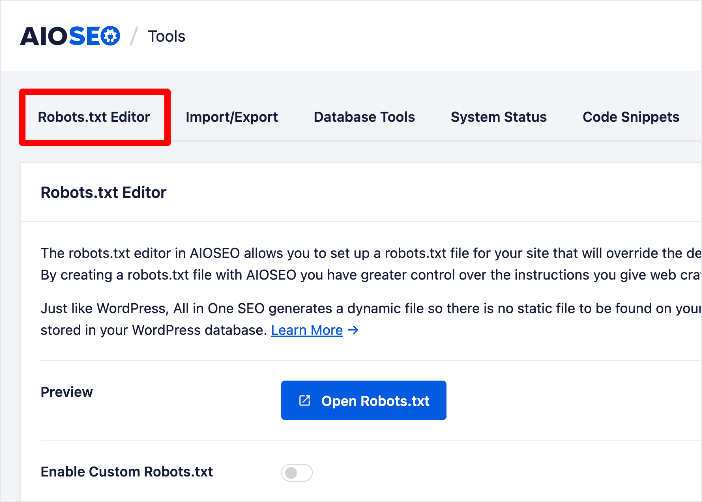

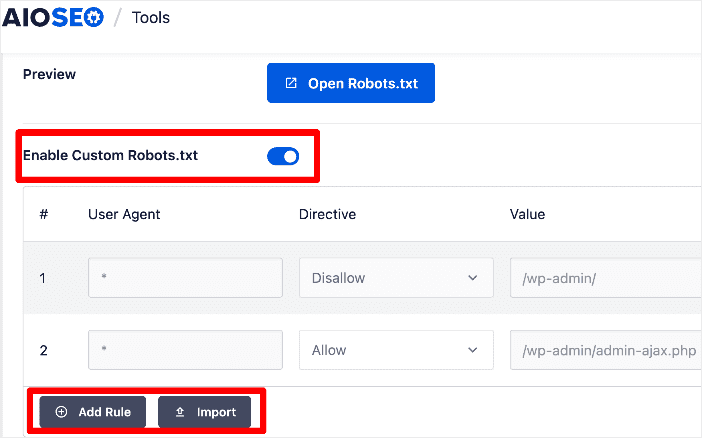

Another option is to set crawl directives in your site’s robots.txt file directly within AIOSEO. To access the robots.txt editor, go to the All in One SEO menu » Tools » Robots.txt Editor.

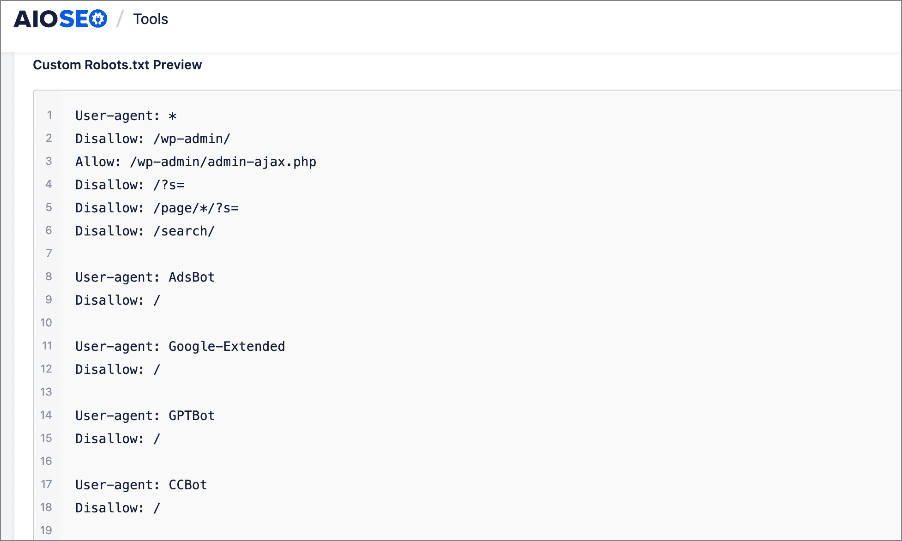

The robots.txt file tells bots which parts of your site they can or cannot access. You can add directives to disallow bots from accessing specific directories or files.
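As a rough illustration of directory-level directives, the Python sketch below defines a tiny robots.txt and uses the standard library parser to see which paths a rule-abiding bot would skip. The /private-files/ directory and the sample paths are made-up examples, so adjust them to match your own site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep every bot out of two directories.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /private-files/
"""


def blocked_paths(robots_txt, user_agent, paths):
    """Return the subset of `paths` that `user_agent` may not fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in paths if not parser.can_fetch(user_agent, path)]


sample_paths = [
    "/wp-admin/options.php",
    "/private-files/report.pdf",
    "/blog/hello-world/",
]
off_limits = blocked_paths(ROBOTS_TXT, "AnyBot", sample_paths)
```

Here the two paths inside the disallowed directories end up in `off_limits`, while the blog post stays crawlable.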

You can even import directives from another site, making it very easy to apply directives from one of your other sites or a site you like. Here’s an example below:

Note:

- robots.txt is public: Anyone can view your robots.txt file. Don’t include sensitive information.

- Not All Bots Obey robots.txt: Malicious bots often ignore these directives. For them, you’ll need additional measures like security plugins or server-level blocks.
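To show what a block that doesn't rely on the bot's cooperation can look like, here is a minimal Python sketch of a WSGI wrapper that refuses requests whose User-Agent contains a blocked name. It is only an illustration of the idea. WordPress itself runs on PHP, the bot names are made up, and real security plugins and firewalls use many more signals, since a User-Agent string is easy to fake.

```python
# Made-up bot names for illustration; matching is case-insensitive.
BLOCKED_AGENT_TOKENS = ("badbot", "evilscraper")


def block_unwanted_bots(app, blocked_tokens=BLOCKED_AGENT_TOKENS):
    """Wrap a WSGI app so requests from blocked user agents get a 403."""
    def middleware(environ, start_response):
        user_agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(token in user_agent for token in blocked_tokens):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden"]
        return app(environ, start_response)
    return middleware


def demo_app(environ, start_response):
    """A stand-in for the real site."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello, human visitor"]


def request_status(app, user_agent):
    """Send a fake request to a WSGI app and return its status line."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    list(app({"HTTP_USER_AGENT": user_agent}, start_response))
    return captured["status"]


protected = block_unwanted_bots(demo_app)
bot_status = request_status(protected, "Mozilla/5.0 BadBot/1.0")
human_status = request_status(protected, "Mozilla/5.0 (Windows NT 10.0)")
```

Unlike robots.txt, this kind of check is enforced by your server, so the bot never gets the page no matter what rules it chooses to ignore.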

That’s it!

You now know how to stop bot traffic from messing with your site’s performance.

How to Stop Bot Traffic on Your Site: Your FAQs Answered

What is bot traffic?

Bot traffic is any non-human traffic to your website. It comes from bots, which are automated software applications that can be either beneficial, such as helping with search engine indexing, or malicious, causing issues like slowing down the site or stealing sensitive information.

Can bots ignore robots.txt?

Yes, unfortunately, many malicious bots ignore robots.txt directives. That’s why it’s important to use additional defenses like security plugins and WAFs (Web Application Firewalls).

Will blocking bots affect my SEO?

Blocking good bots, like Google’s crawler, can harm your SEO by preventing your site from being indexed. Always double-check which bots you’re blocking to ensure you’re only stopping unwanted ones.

Is there a way to block bots without a plugin?

Yes, you can block bots by editing your .htaccess file or by configuring your server’s firewall. However, these methods require technical knowledge and can be risky. Misconfigurations can lead to your site becoming inaccessible.

We hope this post helped you learn how to stop unwanted bot traffic from messing with your site’s performance. You may also want to check out other articles on our blog, like our guide on robots.txt files or our tutorial on tracking your keyword rankings in WordPress.


Disclosure: Our content is reader-supported. This means if you click on some of our links, then we may earn a commission. We only recommend products that we believe will add value to our readers.